Picasso

Picasso: Robotic Painter

Humphrey Yang, Hang Wang, Advisors: Jose Pertierra-Arrojo, Ardavan Bidgoli

Role: Pipeline implementation, documentation, simulation, physical testing

We explore and develop a framework for using a Kinect sensor and industrial robots for human-machine interaction. With the multifunctional Kinect sensor mounted on it, the robot becomes more human-like and mobile, as it does not rely on fixed-position cameras. In environments where an accurate digital model of the space is difficult to acquire, or where a fixed camera position and orientation cannot be guaranteed, we expect our system to outperform configurations with externally placed sensors. We also speculate that, with a robotic arm, data (especially 3D geometry) can be represented without being translated into 2D and losing its depth information.

In this project, we create an interactive experience using an industrial robot (ABB 6640, 6 DOF + rail) fitted with a Kinect (V2). Unlike previous work, the robot’s workflow does not require predefined objects. Instead, it relies on its sensor and program to identify objects of interest. We leverage this property to create a drawing robot that logs user gestures and draws them on a canvas. The robot is not limited to a fixed observation point and observes the user from different angles, hence the name Picasso. After reading the gestures, the robot finds a drawable object (a piece of styrofoam) on the table and draws on it.

We implement our project with Rhinoceros and Grasshopper, using HAL to communicate with the robot and Tarsier to stream Kinect sensor data. The 3D environment of Rhinoceros provides a robust representation of 3D geometries and versatile ways to manipulate them, while Grasshopper provides us with a parametric computation pipeline.

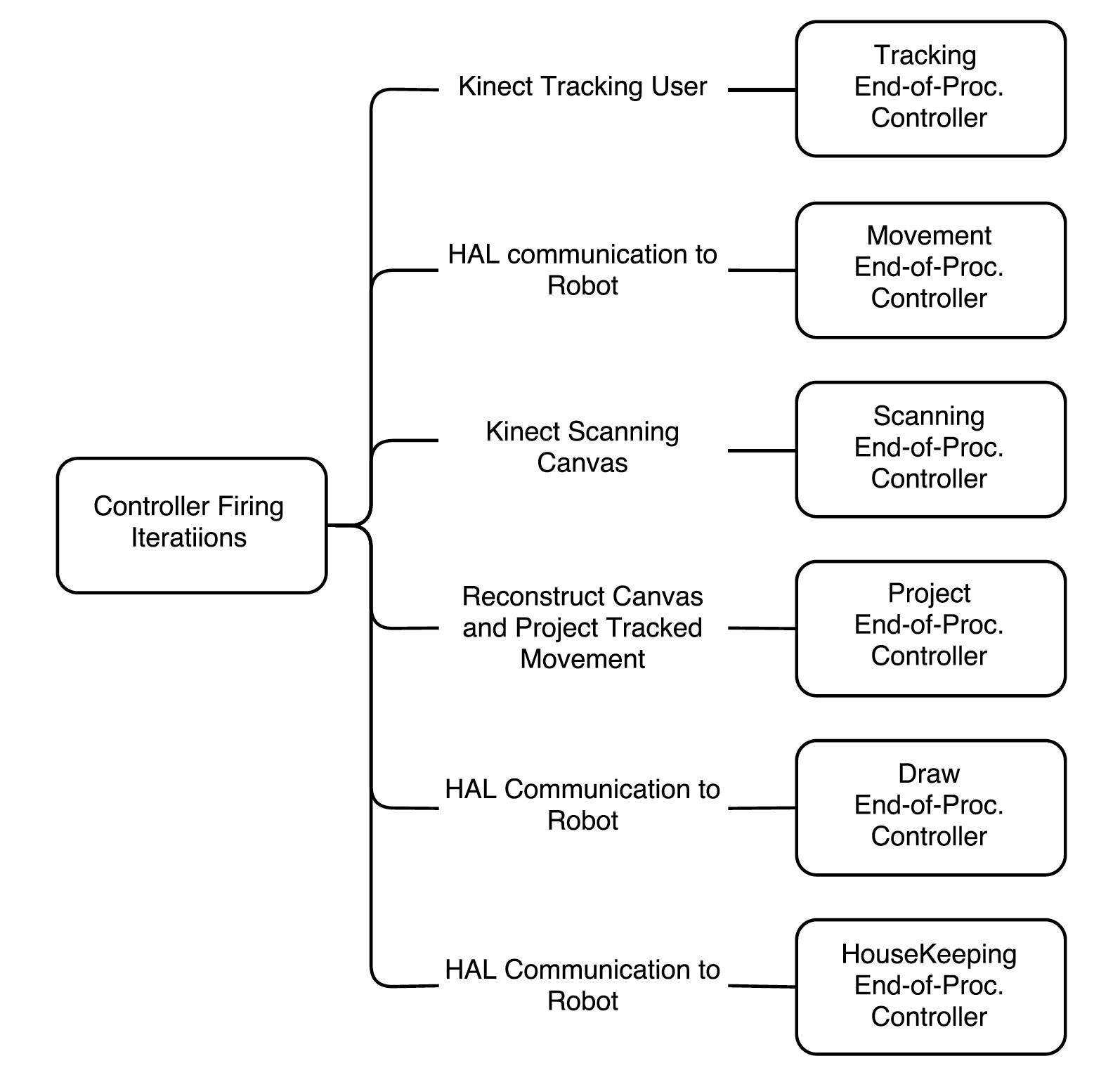

We use GH Python to implement the controller. Note that only one controller instance is created in the workflow, and it is passed around during execution. The controller fires at a preset interval and determines which procedure to call. Procedures are implemented with Grasshopper components. Upon completion of a procedure, the controller at the end of the procedure script records and evaluates the computed data, determines the next procedure to call, and refires itself.
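The controller loop described above can be sketched in plain Python. This is a minimal illustration, not the project's actual GH Python script: the `Controller` class, the `run` helper, and the procedure names (`observe_gesture`, `find_canvas`, `draw`) are all hypothetical, and an ordinary loop stands in for the preset-interval refiring that Grasshopper would provide.

```python
class Controller(object):
    """Single shared controller that decides which procedure runs next."""

    def __init__(self, procedures, start):
        # procedures: name -> callable(data) returning (result, next_name)
        self.procedures = procedures
        self.current = start
        self.log = []  # (procedure name, result) pairs, in execution order

    def fire(self, data=None):
        """Run the current procedure, record its result, pick the next one."""
        if self.current is None:
            return data
        result, next_name = self.procedures[self.current](data)
        self.log.append((self.current, result))
        self.current = next_name
        return result


# Hypothetical procedures; in the project these are Grasshopper components.
def observe_gesture(_):
    path = [(0.0, 0.0, 0.0), (0.1, 0.2, 0.0)]  # placeholder hand positions
    return path, "find_canvas"


def find_canvas(path):
    return {"path": path, "canvas_found": True}, "draw"


def draw(job):
    return len(job["path"]), None  # None means no further procedure


PROCEDURES = {
    "observe_gesture": observe_gesture,
    "find_canvas": find_canvas,
    "draw": draw,
}


def run(controller, max_fires=10):
    """Stand-in for the timed refiring: fire until no procedure remains."""
    data = None
    fires = 0
    while controller.current is not None and fires < max_fires:
        data = controller.fire(data)
        fires += 1
    return data


controller = Controller(PROCEDURES, "observe_gesture")
points_drawn = run(controller)
```

Keeping a single controller object and passing each procedure's result to the next mirrors the idea of one instance being handed around during execution; the `log` list plays the role of the recorded data.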

We test the interactive workflow both in simulation (with RobotStudio) and on the physical robot. In these experiments, the user moves their arms to create a gesture, and the robot then replays this gesture on the canvas. The canvas objects are not predefined, so the operator can swap in a new piece between drawings.
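Because the canvas is detected at run time, a recorded gesture has to be fitted to whatever canvas is found. The sketch below shows one possible way to do this, assuming the gesture has already been projected to 2D points and the canvas is an axis-aligned rectangle. The function name `fit_path_to_canvas` and its parameters are hypothetical and do not describe the project's actual mapping.

```python
def fit_path_to_canvas(path, canvas_min, canvas_max, margin=0.0):
    """Uniformly scale and center a 2D path inside a rectangular canvas.

    path: list of (x, y) gesture points (assumed already projected to 2D).
    canvas_min, canvas_max: opposite corners (x, y) of the canvas rectangle.
    margin: blank border kept on every side, in canvas units.
    """
    if not path:
        return []

    xs = [p[0] for p in path]
    ys = [p[1] for p in path]
    min_x, max_x = min(xs), max(xs)
    min_y, max_y = min(ys), max(ys)

    usable_w = (canvas_max[0] - canvas_min[0]) - 2.0 * margin
    usable_h = (canvas_max[1] - canvas_min[1]) - 2.0 * margin

    # Use the smaller ratio so the gesture keeps its aspect ratio.
    scales = []
    if max_x > min_x:
        scales.append(usable_w / (max_x - min_x))
    if max_y > min_y:
        scales.append(usable_h / (max_y - min_y))
    scale = min(scales) if scales else 1.0

    # Move the gesture's bounding-box center onto the canvas center.
    canvas_cx = (canvas_min[0] + canvas_max[0]) / 2.0
    canvas_cy = (canvas_min[1] + canvas_max[1]) / 2.0
    path_cx = (min_x + max_x) / 2.0
    path_cy = (min_y + max_y) / 2.0

    return [
        (canvas_cx + (x - path_cx) * scale, canvas_cy + (y - path_cy) * scale)
        for x, y in path
    ]


fitted = fit_path_to_canvas([(0, 0), (2, 1)], (0, 0), (4, 4))
```

Scaling uniformly keeps the gesture's proportions intact, so a newly swapped-in canvas of a different size changes only the drawing's scale and position.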